Product Release: July 19, 2023

With much love, we have shipped several new features to make life easier for data scientists and ML engineers using MarkovML. Feel free to share feedback about this release in our Slack community.

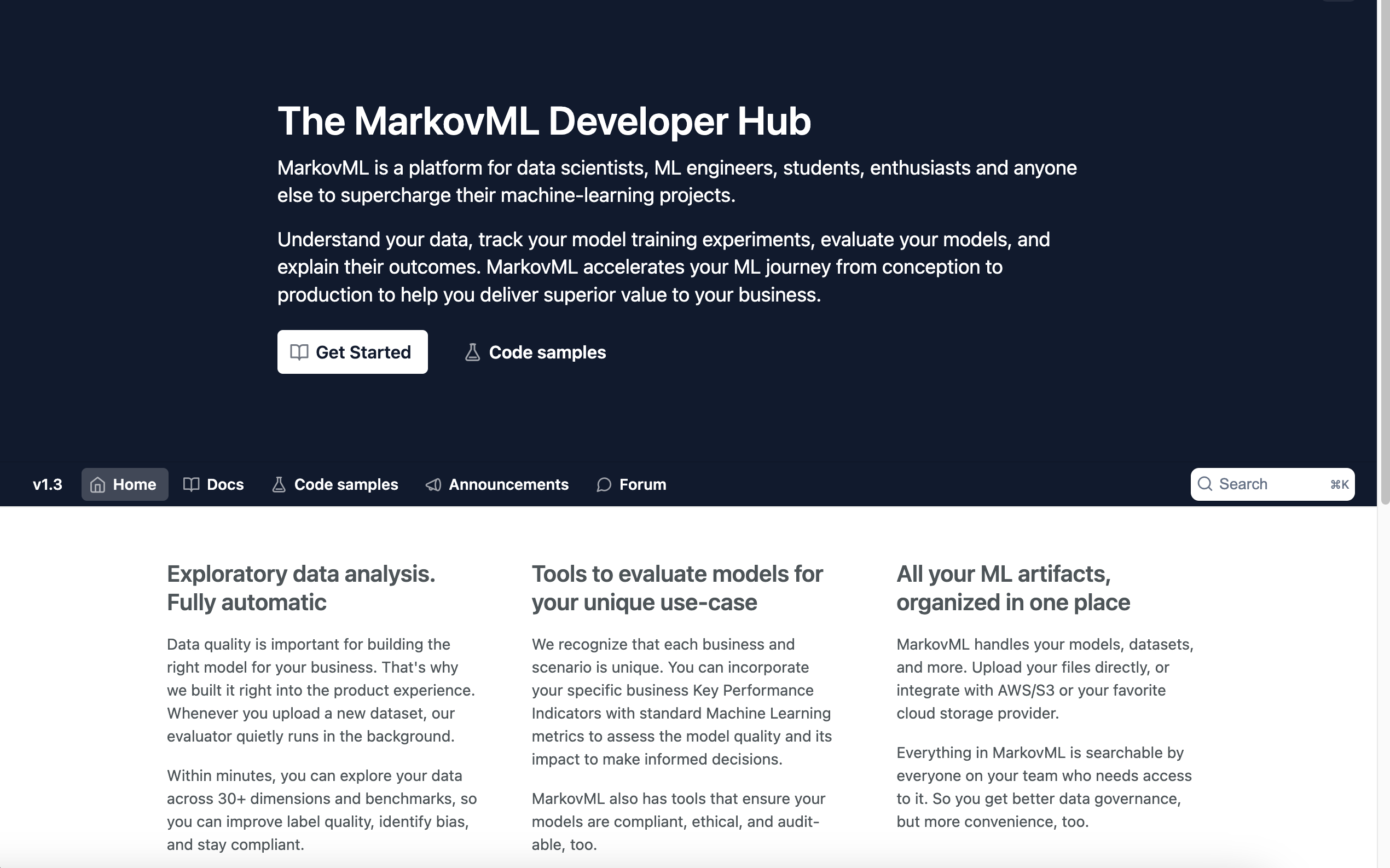

Brand New Developer Docs

We have migrated our developer docs to a new platform that makes the content easier to navigate and adds features, such as forums and announcements, to help us connect better with our users. We hope this makes it much easier to get started with MarkovML and to learn how to use the product more effectively.

To view our new developer docs, visit: https://developer.markovml.com

Developer docs home page

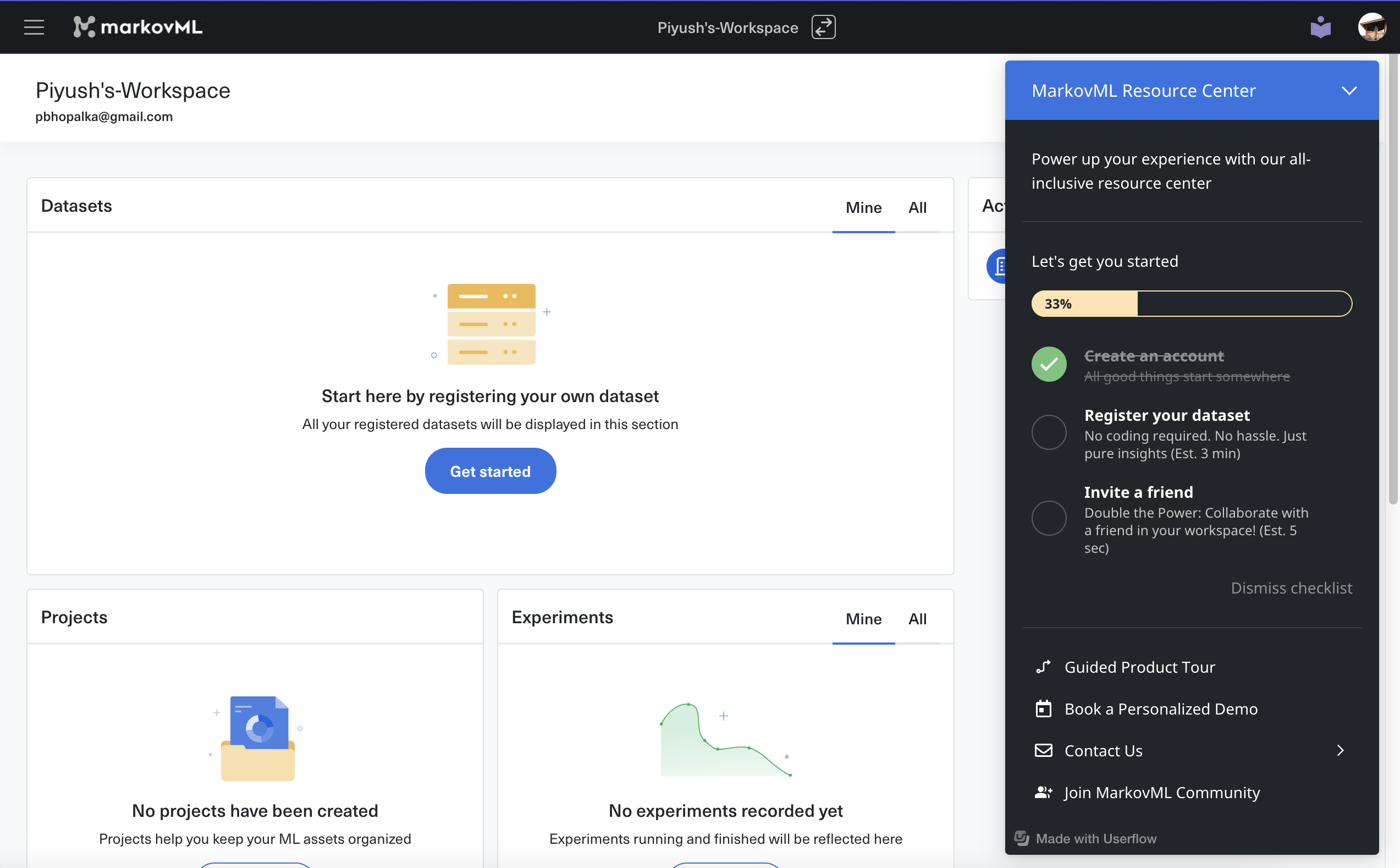

Launching MarkovML Resource Center

We are introducing a new Resource Center launcher (located in the bottom-right corner of the screen) with everything you need to get started with MarkovML. From the Resource Center you can view your "Getting started" steps, take a product tour, book a demo, or contact support. For example, if SDK sample code isn't working or an analyzer is failing, feel free to reach out to the team via the Resource Center.

You can also join our Slack community to get in touch with the team or follow what's going on.

MarkovML Resource Center for all the help you need

Improved Onboarding Experience

We have revamped our onboarding experience. By popular demand, we now allow new users to update their default username during the onboarding process. Feel free to choose whatever username you like when signing up.

New onboarding flow with ability to update username

Snippet Improvements

We have made some usability improvements to snippets.

When you leave a comment in a snippet, you can now mention other team members in the workspace.

Mention your team members in comments 🎉

Empty snippets now place the cursor where you can start writing right away. A snippet title is no longer required, so you can focus on drafting the content (you can always add a title later).

A placeholder will appear on empty lines to indicate the current position of the cursor.

Improving the Empty Snippets screen

Experiments

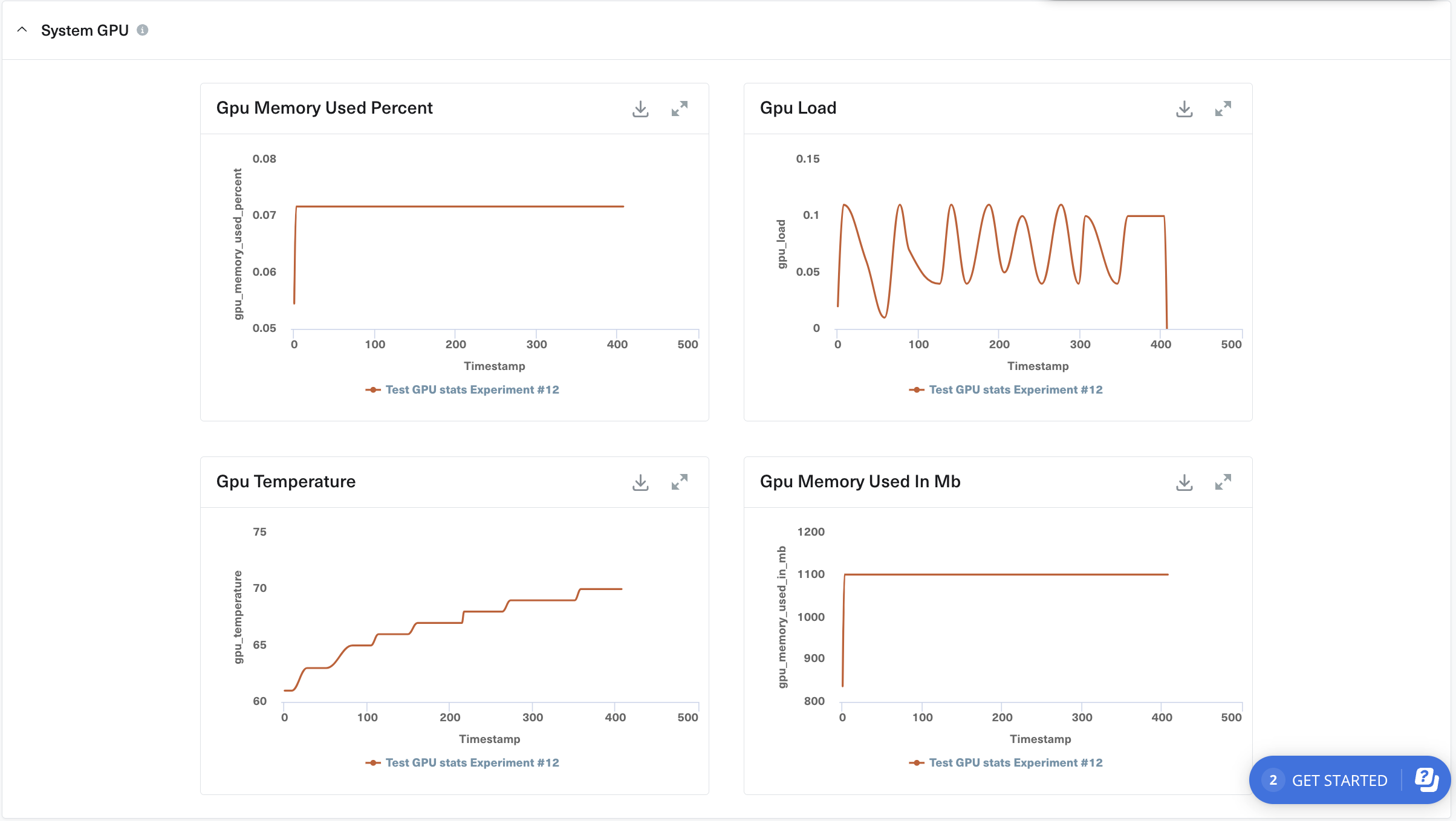

We now support GPU utilization metrics for experiments. You can monitor and track GPU utilization during your experiments to see how your models use GPU resources.

GPU utilization statistics on experiment comparison page
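
As a rough illustration of the kind of insight utilization numbers can give, here is a minimal sketch that summarizes a list of GPU utilization samples. It does not use the MarkovML SDK: the function name `summarize_gpu_utilization`, the `idle_threshold` parameter, and the sample values are all hypothetical, and the samples are assumed to be percentages you have already collected.

```python
from statistics import mean


def summarize_gpu_utilization(samples, idle_threshold=10.0):
    """Summarize GPU utilization samples given as percentages (0-100).

    Hypothetical helper for illustration only; not part of the MarkovML SDK.
    """
    if not samples:
        raise ValueError("need at least one utilization sample")
    # Count samples where the GPU was close to idle.
    idle_count = sum(1 for s in samples if s < idle_threshold)
    return {
        "mean_pct": mean(samples),
        "peak_pct": max(samples),
        "idle_fraction": idle_count / len(samples),
    }


# Made-up samples, e.g. one reading per logging interval.
summary = summarize_gpu_utilization([0.0, 50.0, 100.0, 50.0])
print(summary)
```

A high idle fraction alongside a high peak often suggests the GPU is waiting on something else, such as data loading, for part of the run.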

Model Evaluations

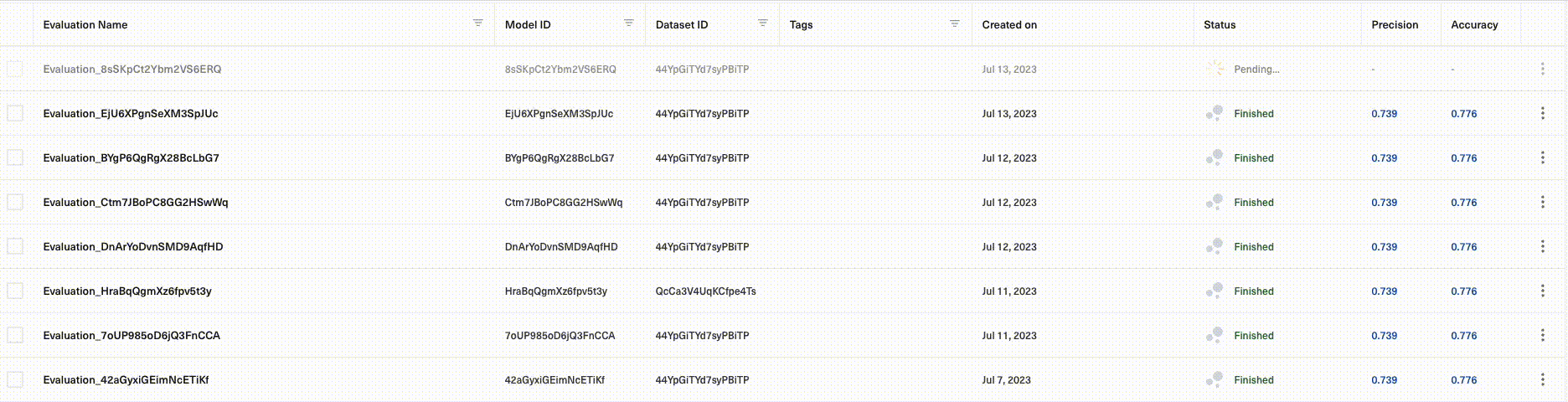

On the model evaluation list page, you can now see the status of each evaluation at a glance, so important status information is surfaced upfront.

Status of evaluation right on evaluation list page

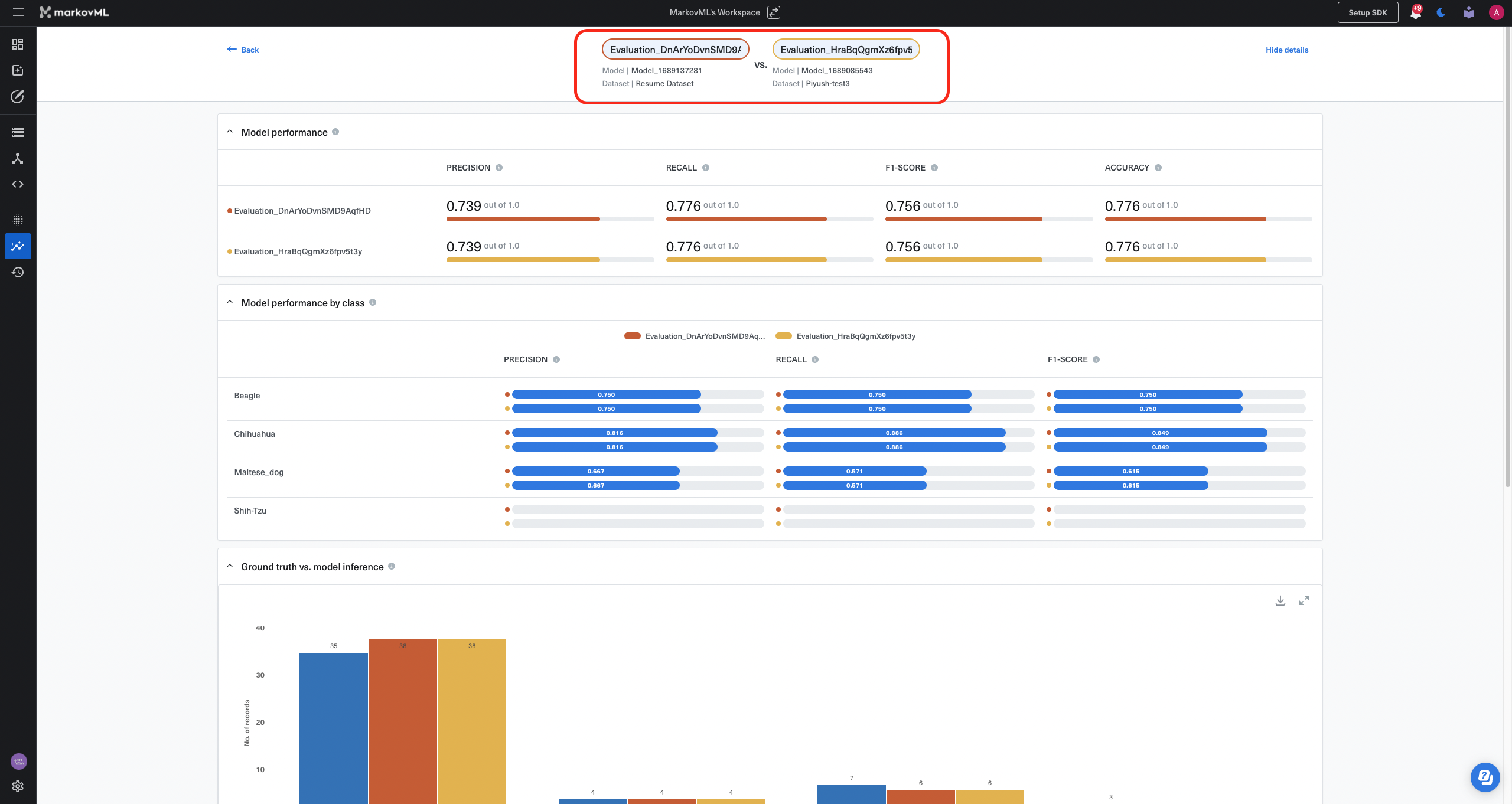

We now allow you to compare evaluations associated with different datasets. To provide a meaningful comparison, these evaluations must share the same labels and model classes and be linked to datasets registered with MarkovML. This enhancement adds flexibility and allows you to gain valuable insights by analyzing and contrasting the performance of related evaluations on different datasets.

Allow model evaluation comparison across different datasets
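
The comparison rule described above can be sketched as a simple eligibility check. This is only an illustration under stated assumptions, not MarkovML's implementation: the `Evaluation` class, its fields, and `can_compare` are hypothetical names, and labels and model classes are modeled here as sets of strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Evaluation:
    """Hypothetical stand-in for an evaluation record (not the MarkovML SDK)."""
    name: str
    dataset_id: str
    labels: frozenset
    model_classes: frozenset
    dataset_registered: bool  # assumed flag: dataset is registered with MarkovML


def can_compare(a: Evaluation, b: Evaluation) -> bool:
    """Return True if two evaluations meet the stated comparison conditions."""
    return (
        a.labels == b.labels
        and a.model_classes == b.model_classes
        and a.dataset_registered
        and b.dataset_registered
    )


# Made-up example data: same labels and classes, different datasets.
labels = frozenset({"spam", "ham"})
eval_a = Evaluation("eval-a", "dataset-1", labels, labels, True)
eval_b = Evaluation("eval-b", "dataset-2", labels, labels, True)
eval_c = Evaluation("eval-c", "dataset-3", frozenset({"spam"}), labels, True)

print(can_compare(eval_a, eval_b))
print(can_compare(eval_a, eval_c))
```

Note that the datasets themselves may differ; only the labels, model classes, and registration status are checked.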